Testing in New York: Lessons to Be Learned

New York is currently facing a challenge. The scores from a state standardized exam that is supposed to be aligned with the Common Core are not pretty. The New York Times reports:

...In New York City, 26 percent of students in third through eighth grade passed the tests in English, and 30 percent passed in math, according to the New York State Education Department.

The exams were some of the first in the nation to be aligned with a more rigorous set of standards known as the Common Core, which emphasize deep analysis and creative problem-solving over short answers and memorization. Last year, under an easier test, 47 percent of city students passed in English, and 60 percent in math.

City and state officials spent months trying to steel the public for the grim figures.

But when the results were released, many educators responded with shock that their students measured up so poorly against the new yardsticks of achievement....

|

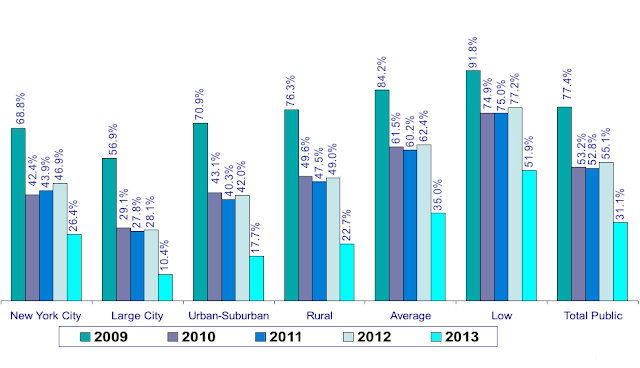

| English Scores Above figure downloaded from http://www.p12.nysed.gov/irs/ela-math/2013/2013-08-06FINALELAandMathPRESENTATIONDECK_v2.ppt "Low corresponds to schools in affluent communities" |

Math Scores. Figure downloaded from http://www.p12.nysed.gov/irs/ela-math/2013/2013-08-06FINALELAandMathPRESENTATIONDECK_v2.ppt "Low corresponds to schools in affluent communities"

The results shown above confirm what has long been known: "Lower-need communities are outperforming other areas in the state." The same trend appears in the earlier exams: schools that performed well on previous exams also have higher scores on the new one. There is nothing controversial here, as poverty has long been recognized as an important factor in education outcomes. The new exam scores also continue to highlight the achievement gap across ethnic groups:

English Scores. Figure downloaded from http://www.p12.nysed.gov/irs/ela-math/2013/2013-08-06FINALELAandMathPRESENTATIONDECK_v2.ppt

Math Scores. Figure downloaded from http://www.p12.nysed.gov/irs/ela-math/2013/2013-08-06FINALELAandMathPRESENTATIONDECK_v2.ppt

What is particularly interesting here is the difference between the officials and the educators. Officials evidently expected the drop in scores, as illustrated by a memo written by Ken Slentz, Deputy Commissioner, Office of P-12 Education:

...Only 30.9% of the 2006 cohort graduated with a Regents diploma with Advanced Designation, and only 36.7% of the graduates in the cohort scored at least 75 and 80 on their English and math Regents exams, respectively (these Regents exam cut scores are considered to be the minimum necessary for college-readiness). These high school results are consistent with New York’s elementary and middle school scores on NAEP: for the 2011 school year, only 30% and 35% of New York’s Grade 8 students scored proficient on the NAEP in math and reading, respectively. These sobering high school outcomes make it even more important that our new Grades 3-8 ELA and math Common Core assessments provide educators and parents with early indicators of the trajectory to college- and career-readiness long before our students enter high school.

The percentages of students cited above (around 30%) are in fact very close to the scores in the new exam. The Common Core is supposed to be a more rigorous set of standards. The bigger surprise would have been students doing very well in these exams, especially if the new curriculum has yet to be ironed out and fully implemented.

Why the negative reaction? Perhaps the last two paragraphs of the article, which quote US Secretary of Education Arne Duncan, may help answer this question:

Speaking with reporters, Mr. Duncan said the shift to the Common Core standards was a necessary recalibration that would better prepare students for college and the work force.

“Too many school systems lied to children, families and communities,” Mr. Duncan said. “Finally, we are holding ourselves accountable as educators.”

The first part sounds innocuous. "Recalibration that would better prepare students for college and the work force" should not ruffle anyone's feathers. But the second part is dramatically different. First, it accuses schools of lying. Then, it casts the exam as a way to "hold educators accountable". This is where the problem starts.

Some of the exam questions are available to the public. A sampling of Grade 3 questions in math, for example, can be downloaded from the New York State Education Department website. A group of anonymous mathematicians has in fact criticized some of these questions on their blog, CCSSI Mathematics. The following is an example:

Grade 3

The problem here is one of language, particularly the misuse of nouns and descriptors. The set-up question refers to “objects” in order to cover the nouns in all the various scenarios, which include: boxes, pencils, people, buses, marbles, groups, books and shelves.

Within each answer choice, the student must decide between two nouns which “object” the set-up would be referring to. We’d be hard-pressed to apply the term “objects” to people, but it’s equally inapt for shelves and buses, so we could eliminate choice B simply because there are no “objects”. Luckily, it’s not the answer that received credit.

The problem with credited answer C is that the word “objects” isn’t really suitable for “groups”, either.

There are two nouns, marbles and groups, and the phrase, “There are 24 marbles that need to be sorted into 4 equal groups”, is not explicitly followed by an unambiguous and grade-appropriate “How many groups are there?” In order to find that question, one must return to the set-up, the phrasing of which could be distilled into “the number of objects represented by 24 ÷ 4.” The gap remains that students must infer the question is asking for the number of groups as applied to choice C. Setting out the task of making that language inference is not what the Common Core standard is supposed to test. This is a math test.

Although we understand the intent of this problem and the underlying math is sound, the problem is heavily biased against students with language weaknesses because it requires inferences and fairly advanced interpretive skills, especially for Grade 3.

Another example, which illustrates not only problems in language but errors in math, is the following:

Grade 6

Here is an example of bad mathematics.

While simplifying the expression in choice A arrives at the correct distance, 9, one does not calculate a distance by taking absolute values and adding them. One takes the absolute value of the difference in values. Thus, the correct expression would be either | 3 – (–6) | or | (–6) – 3 |.

If the points were instead A(–6, 4) and B(–3,4), applying NYSED’s "method", one would get |–6|+|–3|=9, which is wrong.

Developing tests is a difficult task. It requires peer review because test writers are often so immersed inside the box that they may take things for granted. When I served as a committee member of the Educational Testing Service (ETS) for the Graduate Record Examination in chemistry, each test question needed to be unanimously approved by an eight-member committee. These votes happened only after several rounds of review of each question by several professors from other universities. This only addresses the correctness of a single question. The entire exam needs to be gauged according to balance, coverage and difficulty, and each question must have discriminating ability and, at the same time, must correlate with other questions in terms of which students get the most answers correct. It is therefore required that a new exam be tested first.
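The arithmetic behind the Grade 6 critique quoted above can be checked directly. The following is only a minimal sketch: the function names are hypothetical, and the coordinates 3 and –6 are inferred from the quoted expressions rather than taken from the exam item itself. It compares the absolute value of the difference with the sum of absolute values for both pairs of points.

```python
def distance_on_line(a: float, b: float) -> float:
    """Distance between two coordinates on a number line: |a - b|."""
    return abs(a - b)


def sum_of_absolute_values(a: float, b: float) -> float:
    """The pattern criticized in the quoted post: |a| + |b| (hypothetical name)."""
    return abs(a) + abs(b)


# Coordinates inferred from the quoted expressions |3 - (-6)| and |(-6) - 3|.
print(distance_on_line(3, -6))         # 9
print(sum_of_absolute_values(3, -6))   # 9, agrees only because the signs differ

# Counterexample from the quoted post: A(-6, 4) and B(-3, 4) share y = 4,
# so only the x-coordinates matter.
print(distance_on_line(-6, -3))        # 3
print(sum_of_absolute_values(-6, -3))  # 9, which is not the distance
```

The two expressions coincide only when the coordinates lie on opposite sides of zero, which is why the credited answer happens to give 9 for the original item while the same pattern fails for the counterexample.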

I give tests in the classes that I teach. I do not have the same resources as the ETS but I draw a lot from experience as well as from exams or problems written by other instructors. In the end, what really matters is what one does with the test results. This is really where the two camps diverge, as Willingham describes in his blog article, "How to make edu-blogging less boring":

- People who are angry about the unintended social consequences of standardized testing have a legitimate point. They are not all apologists for lazy teachers or advocates of the status quo. Calling for high-stakes testing while taking no account of these social consequences, offering no solution to the problem . . . that's boring.

- People who insist on standardized assessments have a legitimate point. They are not all corporate stooges and teacher-haters. Deriding “bubble sheet” testing while offering no viable alternative method of assessment . . . that's boring.

Testing in New York should go beyond testing. But it should not go into a territory tests are not meant for. Testing in New York should instead provide lessons to be learned. We still have a long way to go to improve education in terms of both excellence and equity.
