Should We Believe Educational Research?

One of the questions I asked in a survey of learning myths is this:

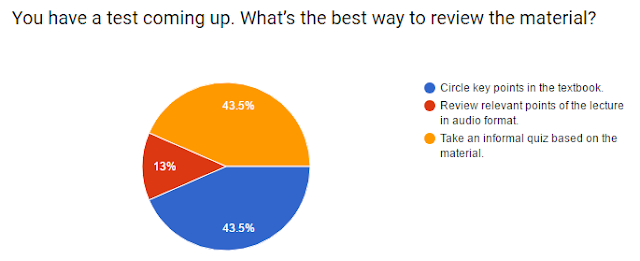

You have a test coming up. What’s the best way to review the material?

- Circle key points in the textbook.

- Review relevant points of the lecture in audio format.

- Take an informal quiz based on the material.

The responses I have received so far draw the following picture:

It appears to be a tie between highlighting parts of a textbook and taking a practice exam. Educational research is quite clear on this question: taking practice tests appears to be one of the most effective ways to learn, as the following figure shows:

The difference is clear: students who took practice tests performed better than those who simply restudied. The positive effect of testing on learning has also been highlighted in an article by Annie Murphy Paul in Scientific American:

(Above copied from Scientific American)

Dunlosky and coworkers, the authors of the article in Psychological Science in the Public Interest, nevertheless expressed some caution regarding these findings:

"Regarding recommendations for future research, one gap identified in the literature concerns the extent to which the benefits of practice testing depend on learners’ characteristics, such as prior knowledge or ability. Exploring individual differences in testing effects would align well with the aim to identify the broader generalizability of the benefits of practice testing."

Studies on testing effects have yet to control for students' characteristics. In fact, a recent paper on testing effects still mentions this limitation:

"One methodological point is worth revisiting: The inability to randomly assign participants to conditions poses a potential limitation to our conclusions."

The research article, published in the Journal of Educational Psychology, describes studies conducted over four college semesters. In each semester, an experiment was performed in a general psychology class. The four studies are as follows:

Study 1: A standard testing section (two midterms and two pop quizzes) versus a frequent testing section (four midterms, four pop quizzes)

Study 2: A standard testing section (two midterms and two pop quizzes) versus a frequent testing section (eight short in-course exams)

Study 3: A standard testing section (two midterms and two pop quizzes, plus unannounced low-stakes quizzes) versus a frequent testing section (eight short in-course exams plus unannounced low-stakes quizzes)

Study 4: A standard testing section (two midterms and two pop quizzes, plus announced ungraded quizzes) versus a frequent testing section (eight short in-course exams plus announced ungraded quizzes)

The results are summarized below in terms of the students' performance on the final exam:

There is a marked difference between these recent results and those presented in Butler (2010). One should keep in mind, however, that the recent study does not really compare practice testing with restudying; it compares taking two exams with taking four or eight exams before the final. In other words, even the standard format includes testing. Practice testing may therefore be highly beneficial, while the effect of how often tests are given appears to be much smaller. The two studies are thus very different, and one should not automatically add "frequent" to the recommendation just because practice testing has been found to improve learning. It is also important to note that Butler (2010) employed "repeated testing", not merely "frequent testing".
